SOTA Code Retrieval with Efficient Code Embedding Models — https://www.qodo.ai/blog/qodo-embed-1-code-embedding-code-retreival/

#HackerNews #SOTA #Code #Retrieval #Code #Embedding #AI #Technology #Machine #Learning

#AI and #RAG - Learning the basics: What exactly is an #embedding and how to use them in #MariaDB?

https://www.youtube.com/watch?v=XkB2DLK60JU

GitHub - lancedb/lancedb: Developer-friendly, serverless vector database for AI applications. Easily add long-term memory to your LLM apps! https://github.com/lancedb/lancedb #persistence #OpenSource #embedding #database #GitHub #search #vector #ai

My first AI development project! A customer-inquiry chatbot I built with great excitement

https://qiita.com/SatoRyota_zvc/items/c5d647f5174ca8136bcb?utm_campaign=popular_items&utm_medium=feed&utm_source=popular_items

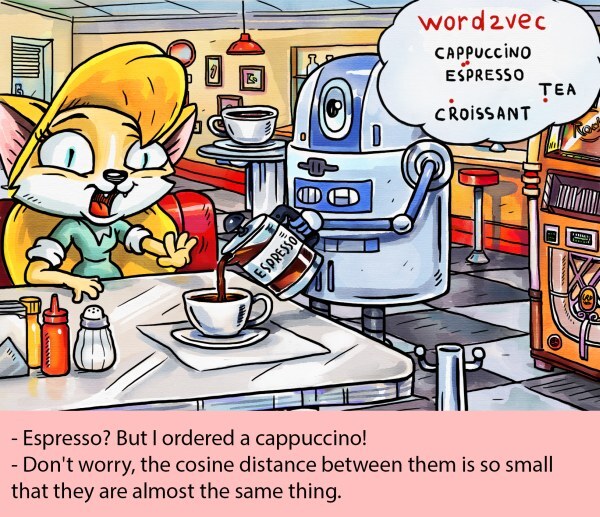

I’m excited to share my newest blog post, "Don't use cosine similarity carelessly"

https://p.migdal.pl/blog/2025/01/dont-use-cosine-similarity

We often rely on cosine similarity to compare embeddings; it's like “duct tape” for vector comparisons. But just like duct tape, it can quietly mask deeper problems. Sometimes embeddings pick up the “wrong kind” of similarity: matching questions to questions instead of questions to answers, or getting thrown off by formatting quirks and typos rather than the text's real meaning.

In my post, I discuss what can go wrong with off-the-shelf cosine similarity and share practical alternatives. If you’ve ever wondered why your retrieval system returns oddly matched items or how to refine your embeddings for more meaningful results, this is for you!

I want to thank Max Salamonowicz and Grzegorz Kossakowski for their feedback after my flash talk at the Warsaw AI Breakfast, Rafał Małanij for inviting me to give a talk at the Python Summit, and everyone who asked curious questions at the conference and on LinkedIn.

Damn, this is really cool, but I wish the README had a big “Prerequisites” section with “NVIDIA” in it #AI #RAG #Embedding #Documents #Ollama https://github.com/TilmanGriesel/chipper

Coaching 2 workshops this week on AI Design Wins using #CosmosDB for #Embedding and #Vectorization of content in text and audio format. Lots of fun and will record videos to publish for all on YouTube soon. @soliancenet @thetrainingboss

SQLite's Use Of Tcl (2017): I had no idea the database was originally written to be used as a Tcl extension. Explains a lot of good things.

https://www.tcl.tk/community/tcl2017/assets/talk93/Paper.html

#via:lobsters #programming #embedding #sqlite #tcl #+

Fine-tuning #embedding models teaches them enterprise semantics, business metrics, and ranking relevance before users ever issue prompts.

https://thenewstack.io/the-secret-sauce-for-vector-search-training-embedding-models/

The current revelation that LLMs can’t reason is causing a lot of shade&fraud, but it’s not entirely true

An LLM could reason if you gave it a corpus of sentences (in whatever languages) that explicitly and unambiguously described a whole big bag of causal relationships and outcomes, things that happen because other things happen, and general structures like these, all described clearly and formally, without any possibility of confusion

The embeddings that result from such a corpus could well work as a reference source of logic, cause, common sense, or reason about lots of things. The next step would be to make these embeddings generalisable, so that common sense about the way life is can be applied widely (again using vector comparison). So yes, it is possible to apply reason to an LLM. The main thing is that there probably isn’t much emphasis on that kind of descriptive, even prescriptive, literature in the source training data in the first place: there’ll be some, maybe a lot, but I don’t think it was emphasised

By introducing it at the RAG level, with the embeddings then migrating back into future models, I believe it could be possible to emulate a lot of common sense about the world and the way things are, purely through describing it. After all, the embeddings produced from such a (very massive) block of description are, as vectors, only numbers, which is what LLMs are really operating on: just vectors, not words, not tokens, just numbers

Consequently, my dreams of applying real-world sensor/actuator ways of learning about the world and building common sense could probably be supplanted by a rigorous, hefty project of simply describing it instead of actually doing it. The thing to watch would be the description itself: it’d have to be as detailed, accurate, and wide-ranging as the experiential model would be, and this might be where the difficulty lies. People describing common sense in the world tend to abbreviate, generalise prematurely, miss things out, misunderstand, and, above all, assume a lot #AI #LLM #reasoning #CommonSense #vector #embedding

An interesting bioRxiv preprint was shared on X (https://x.com/strnr/status/1844105666962579813). The paper describes a model that uses LLMs to represent cells from large-scale scRNA-seq atlases. Beyond the novelty value, one of the main draws should be the ability to map any dataset onto the existing universal cell embedding with no additional data labelling, model training, or fine-tuning. https://www.biorxiv.org/content/10.1101/2023.11.28.568918v2

https://github.com/snap-stanford/UCE

#scRNAseq #embedding #biology #llm
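The post doesn't say what happens after a new dataset is mapped into the shared space. One common downstream use of a shared embedding, sketched here as an assumption rather than as the UCE authors' method, is to label new cells by majority vote among their nearest annotated reference cells. The 2-d embeddings and cell-type labels below are hypothetical stand-ins for real model output.

```python
import math
from collections import Counter

def knn_label(query, reference, k=3):
    """Majority label among the k reference embeddings closest to `query`
    (Euclidean distance). No training step: the embedding space is fixed."""
    nearest = sorted(reference, key=lambda item: math.dist(query, item[0]))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]

# Hypothetical embeddings of annotated reference cells.
reference = [
    ([0.0, 0.1], "T cell"),
    ([0.1, 0.0], "T cell"),
    ([0.2, 0.1], "T cell"),
    ([5.0, 5.1], "B cell"),
    ([5.1, 4.9], "B cell"),
    ([4.9, 5.0], "B cell"),
]
# New, unlabelled cells, assumed already embedded by the frozen model.
new_cells = [[0.05, 0.05], [5.0, 5.0]]
print([knn_label(c, reference) for c in new_cells])  # ['T cell', 'B cell']
```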

How to rerank documents with Embedding models & similarity calculation in RAG:

https://www.glukhov.org/post/2024/09/reranking-with-embedding-models
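As a rough illustration of the idea, here is a minimal sketch of similarity-based reranking: take candidates from a first-stage retriever and reorder them by cosine similarity between each document embedding and the query embedding. The document IDs and vectors are hypothetical; the linked article's exact approach may differ.

```python
import math

def cosine(a, b):
    """Cosine similarity: dot product over the product of vector lengths."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def rerank(query_vec, candidates, top_k=3):
    """Reorder (doc_id, doc_vec) pairs by similarity to the query, highest first."""
    scored = [(doc_id, cosine(query_vec, vec)) for doc_id, vec in candidates]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]

# Hypothetical embeddings; in practice an embedding model produces these.
query = [0.9, 0.1, 0.0]
candidates = [
    ("doc-a", [0.0, 1.0, 0.0]),
    ("doc-b", [1.0, 0.0, 0.0]),
    ("doc-c", [0.7, 0.7, 0.0]),
]
for doc_id, score in rerank(query, candidates, top_k=2):
    print(doc_id, round(score, 3))
```

Here `doc-b` ranks first and `doc-c` second; `doc-a` is cut by `top_k=2`.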

Jina AI just released Jina ColBERT v2, a multilingual late-interaction retriever for #Embedding and #Reranking. The new model supports 89 languages, with superior retrieval performance, user-controlled output dimensions, and an 8192-token context length.
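"Late interaction" means the model keeps one embedding per token instead of pooling each text into a single vector, and scores at query time. Below is a minimal sketch of ColBERT-style MaxSim scoring: each query token takes its best dot product against any document token, and those maxima are summed. The token vectors are toy unit vectors, not Jina's outputs, and this ignores the model's actual tokenizer and dimensions.

```python
import math

def normalize(v):
    """Scale v to unit length so dot products equal cosine similarities."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]

def maxsim_score(query_tokens, doc_tokens):
    """Sum over query tokens of the max dot product with any document token."""
    return sum(
        max(sum(q * d for q, d in zip(q_vec, d_vec)) for d_vec in doc_tokens)
        for q_vec in query_tokens
    )

# Toy per-token embeddings (hypothetical).
query_tokens = [normalize([1, 0, 0]), normalize([0, 1, 0])]
doc1 = [normalize([1, 0, 0]), normalize([0, 1, 0]), normalize([0, 0, 1])]
doc2 = [normalize([1, 1, 0]), normalize([0, 0, 1])]

print(maxsim_score(query_tokens, doc1))  # 2.0: each query token finds an exact match
print(maxsim_score(query_tokens, doc2))  # ~1.414: only partial matches
```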

The server test has let users who embed content avoid liability for copyright infringement. However, the 2007 ruling now faces another major challenge.

https://www.plagiarismtoday.com/2024/08/08/the-server-test-suffers-a-major-blow/

#Development #Techniques

External, styleable, and scalable SVGs · SVG embeddings that leave little to be desired https://ilo.im/15zn1a

_____

#VectorGraphic #SVG #Embedding #WebPage #WebDev #Frontend #HTML #CSS #CustomProperty

#Development #Pitfalls

YouTube embeds are bananas heavy · Lighter ways to add YouTube videos on your website https://ilo.im/15zdd6

_____

#Video #Youtube #Embedding #WebComponent #ProgressiveEnhancement #WebPerf #WebDev #Frontend #HTML #JavaScript

Gave https://ollama.com/avr/sfr-embedding-mistral a spin, but it took way too long (3+ hours) to generate 5K embeddings on my M3 Pro (32 GB). #llm #embedding #ollama

Can #AI understand emotions?

I dove deep into #LLM #embedding models to extract emotional signals from text, because why not? And yeah, some models capture more than others, but all of them have it to some extent.

Later this week, I am presenting the #ThatConference Open Space "Crafting Intelligent Python Apps with Retrieval-Augmented Generation". If you missed it the first time I did it, I would recommend checking it out. It is going to be over lunch on Friday.

https://that.us/activities/5EI62c1gogbMFYMqilkP

#AI #Embedding #RAG #Python

I've created a basic app for searching an aerial photo using text queries. That's right, you can search for "roundabout" or "school playground" on an image of a city and get pretty good results!

Have a play with it here: https://server1.rtwilson.com/aerial - it's set up with an aerial image of Southampton, UK

Under the hood this uses the SkyCLIP model and the Pinecone vector database.